Keywords: PR curve, ROC curve, Machine Learning, image processing

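To make this concrete, suppose we need to detect people in an image. The classifier labels every pixel in the image as either a person pixel or a non-person pixel. The target is the person, so pixels detected as person are called Positives and pixels detected as non-person are called Negatives. A pixel detected as the target should be reported, and a pixel detected as not the target should be rejected. A pixel that is reported as the target but is not actually the target is a false alarm (wrongly reported), also called a false detection (a non-target taken for the target). A target pixel that should have been detected but was not is a miss, also called a missed detection.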

- True/False = correctly/incorrectly

- Positives/Negatives = identified as target / identified as non-target

Putting the two pairs together, the four terms are easy to understand:

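- True positives (TP) = pixels correctly identified as target (correct detections)

- False positives (FP) = pixels incorrectly identified as target (false detections, i.e., over-reporting)

- True negatives (TN) = pixels correctly identified as non-target (correct rejections)

- False negatives (FN) = pixels incorrectly identified as non-target (missed detections, i.e., under-reporting)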
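A minimal Python sketch of the counting described in the list above, assuming the predicted mask and the ground-truth mask are given as flat, equal-length sequences of 0/1 pixel labels (1 = person/target, 0 = non-person). The function name `confusion_counts` and the 3×3 example image are hypothetical, for illustration only.

```python
def confusion_counts(predicted, ground_truth):
    """Count (TP, FP, TN, FN) for two equal-length sequences of 0/1 pixel labels.

    1 means "person" (target), 0 means "non-person" (non-target).
    """
    if len(predicted) != len(ground_truth):
        raise ValueError("masks must have the same number of pixels")
    tp = fp = tn = fn = 0
    for pred, truth in zip(predicted, ground_truth):
        if pred and truth:
            tp += 1  # correct detection
        elif pred and not truth:
            fp += 1  # false detection (over-reporting)
        elif not pred and not truth:
            tn += 1  # correct rejection
        else:
            fn += 1  # missed detection (under-reporting)
    return tp, fp, tn, fn


# Hypothetical 3x3 image, flattened row by row.
predicted = [1, 1, 0,
             0, 1, 0,
             0, 0, 1]
ground_truth = [1, 0, 0,
                0, 1, 1,
                0, 0, 1]
print(confusion_counts(predicted, ground_truth))  # (3, 1, 4, 1)
```

Every pixel falls into exactly one of the four cases, so the four counts always sum to the number of pixels.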

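These four counts are the basis of the common evaluation methods. From one set of counts we can compute one point in ROC space and one point in PR space. Repeating the computation several times, usually by varying the classifier's decision threshold, gives several points, and connecting them yields the so-called ROC curve and PR curve.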
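A sketch of how sweeping the decision threshold traces out the two curves, assuming a hypothetical classifier that outputs a per-pixel score and predicts "target" when the score is at least the threshold. The function `curve_points`, the scores, and the labels are illustrative assumptions; the sketch also assumes the ground truth contains at least one target and one non-target pixel, so that TPR and FPR are defined.

```python
def curve_points(scores, labels, thresholds):
    """Return (roc_points, pr_points), one entry per threshold.

    A pixel is predicted as target when its score >= threshold.
    roc_points holds (FPR, TPR) pairs; pr_points holds (Recall, Precision) pairs.
    """
    positives = sum(1 for y in labels if y)  # actual target pixels (TP + FN)
    negatives = len(labels) - positives      # actual non-target pixels (FP + TN)
    roc_points, pr_points = [], []
    for t in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and not y)
        fn = positives - tp
        tn = negatives - fp
        tpr = tp / (tp + fn)  # also the Recall
        fpr = fp / (fp + tn)
        roc_points.append((fpr, tpr))
        # Precision is 0/0 when nothing is predicted as target; skip that PR point.
        if tp + fp > 0:
            pr_points.append((tpr, tp / (tp + fp)))
    return roc_points, pr_points


# Hypothetical per-pixel scores and ground-truth labels (1 = target).
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
labels = [1, 1, 0, 1, 0, 0]
thresholds = sorted(set(scores), reverse=True)
roc, pr = curve_points(scores, labels, thresholds)
print(roc)
print(pr)
```

Each threshold yields one confusion matrix and therefore one point in each space. The (FPR, TPR) points, connected in order, form the ROC curve; the (Recall, Precision) points form the PR curve.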


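ROC space (oriented toward the ground truth)

x-axis: False Positive Rate (FPR) = the fraction of actual non-target pixels that are wrongly detected as target (smaller is better)

y-axis: True Positive Rate (TPR) = the fraction of actual target pixels that are correctly detected (larger is better)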
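Written out in terms of the four counts, the two ROC axes take the standard form below. Both denominators are ground-truth totals (FP + TN is the number of actual non-target pixels, TP + FN the number of actual target pixels), which is why ROC space is described as oriented toward the ground truth.

```latex
\mathrm{FPR} = \frac{FP}{FP + TN}, \qquad
\mathrm{TPR} = \frac{TP}{TP + FN}
```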


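PR space (oriented toward the correctness of the detection results)

x-axis: Recall = TPR, the fraction of actual target pixels that are correctly detected (larger is better)

y-axis: Precision = the fraction of pixels detected as target that are correct, i.e., the detection precision (larger is better)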
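The PR axes in the same notation are shown below. Precision divides by TP + FP, the number of pixels the detector reported as target, so it measures how trustworthy the detection results are. The worked numbers on the second line are hypothetical: an image with 100 target pixels and 10,000 non-target pixels, in which the detector finds 80 of the targets (TP = 80, FN = 20) and also reports 200 non-target pixels (FP = 200, TN = 9800).

```latex
\begin{aligned}
&\mathrm{Recall} = \mathrm{TPR} = \frac{TP}{TP + FN}, \qquad
 \mathrm{Precision} = \frac{TP}{TP + FP} \\[4pt]
&\mathrm{TPR} = \frac{80}{80 + 20} = 0.8, \qquad
 \mathrm{FPR} = \frac{200}{200 + 9800} = 0.02, \qquad
 \mathrm{Precision} = \frac{80}{80 + 200} \approx 0.29
\end{aligned}
```

Here the ROC point (0.02, 0.8) looks strong, while Precision shows that fewer than a third of the reported pixels are actually the target; this is the sense in which PR space looks at the correctness of the detection results.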

Summary figure

From the paper "The Relationship Between Precision-Recall and ROC Curves".

See the paper for the detailed discussion; the key passage is quoted below.

2. Review of ROC and Precision-Recall

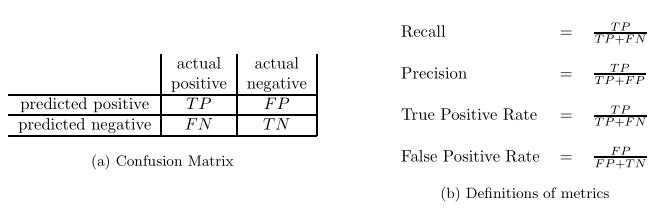

In a binary decision problem, a classifier labels examples as either positive or negative. The decision made by the classifier can be represented in a structure known as a confusion matrix or contingency table. The confusion matrix has four categories: True positives (TP) are examples correctly labeled as positives. False positives (FP) refer to negative examples incorrectly labeled as positive. True negatives (TN) correspond to negatives correctly labeled as negative. Finally, false negatives (FN) refer to positive examples incorrectly labeled as negative.

A confusion matrix is shown in Figure 2(a). The confusion matrix can be used to construct a point in either ROC space or PR space. Given the confusion matrix, we are able to define the metrics used in each space as in Figure 2(b). In ROC space, one plots the False Positive Rate (FPR) on the x-axis and the True Positive Rate (TPR) on the y-axis. The FPR measures the fraction of negative examples that are misclassified as positive. The TPR measures the fraction of positive examples that are correctly labeled. In PR space, one plots Recall on the x-axis and Precision on the y-axis. Recall is the same as TPR, whereas Precision measures the fraction of examples classified as positive that are truly positive. Figure 2(b) gives the definitions for each metric. We will treat the metrics as functions that act on the underlying confusion matrix which defines a point in either ROC space or PR space. Thus, given a confusion matrix A, RECALL(A) returns the Recall associated with A.
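The passage's idea of treating each metric as a function of a confusion matrix A (as in RECALL(A)) maps directly onto code. A minimal Python sketch, assuming a hypothetical `ConfusionMatrix` record; the lowercase function names `recall`, `tpr`, `fpr`, `precision`, `roc_point`, and `pr_point` are illustrative, not from the paper.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """The four counts of a binary confusion matrix (hypothetical record type)."""
    tp: int
    fp: int
    tn: int
    fn: int


def recall(a: ConfusionMatrix) -> float:
    """Fraction of actual positives that are labeled positive."""
    return a.tp / (a.tp + a.fn)


def tpr(a: ConfusionMatrix) -> float:
    """True Positive Rate; identical to Recall."""
    return recall(a)


def fpr(a: ConfusionMatrix) -> float:
    """Fraction of actual negatives misclassified as positive."""
    return a.fp / (a.fp + a.tn)


def precision(a: ConfusionMatrix) -> float:
    """Fraction of examples classified as positive that are truly positive."""
    return a.tp / (a.tp + a.fp)


def roc_point(a: ConfusionMatrix) -> tuple[float, float]:
    return (fpr(a), tpr(a))  # (x, y) in ROC space


def pr_point(a: ConfusionMatrix) -> tuple[float, float]:
    return (recall(a), precision(a))  # (x, y) in PR space


A = ConfusionMatrix(tp=3, fp=1, tn=4, fn=1)  # hypothetical counts
print(roc_point(A))  # (0.2, 0.75)
print(pr_point(A))   # (0.75, 0.75)
```

Because one confusion matrix determines both points, the same A always maps to exactly one point in ROC space and one point in PR space.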